Concurrent Multi-Model On-Device Inference Using the NeuroMosAIc Processor

Author: AiM Future

Uploaded: 2022-07-21

Views: 237

Description:

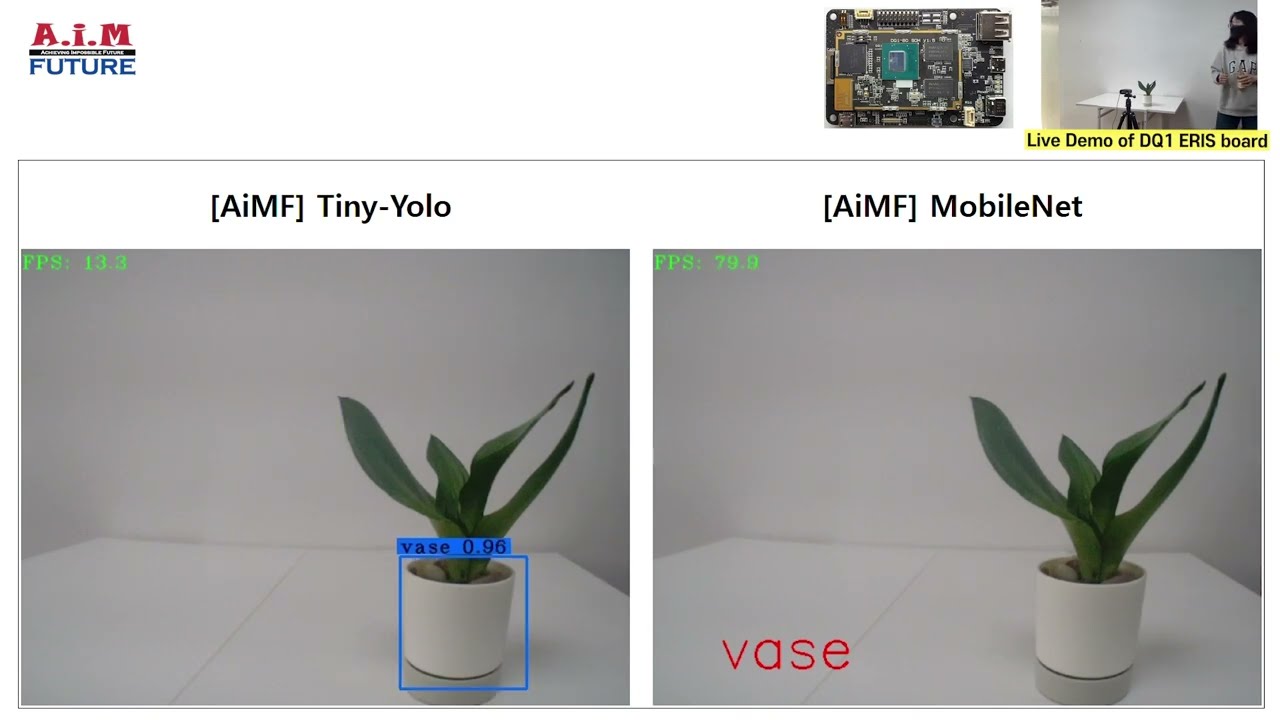

In this video, we demonstrate the flexibility of AiM Future's silicon-proven NeuroMosAIc Processor (NMP) IP running on the existing "Eris" embedded system-on-module (SoM) platform. Our research engineer explains the setup, the deep neural networks (DNNs) and the displayed results.

The example demonstrates the simultaneous execution of the computer vision models Tiny-YOLO version 3 and MobileNet version 2 on the same chip. These are classic examples of the object detection, classification and segmentation algorithms used by a rapidly growing number of edge devices today. Most CPU, GPU and NPU architectures are single-task, single-model execution engines that require costly context switches or many discrete processors to do what the single SoC seen here demonstrates.

As future videos will demonstrate, the flexibility of NMP extends to the execution of multiple model classes, including audio, motion and ambient sensor perception and prediction. These capabilities make it an ideal edge AI accelerator for the broader Artificial Intelligence of Things (AIoT), encompassing agriculture, automotive, consumer electronics, 5G communications, robotics, smart manufacturing, warehousing and logistics, and retail.

To learn more, please visit us at www.aimfuture.ai or contact us at [email protected].
