Mastering Stochastic Gradient Descent Implementation in Python from Scratch

Author: vlogize

Uploaded: 2025-09-28


Description:

Discover a comprehensive guide on how to effectively implement `Stochastic Gradient Descent` in Python. Follow step-by-step corrections and enhancements to ensure optimal performance and convergence to minima.

---

This video is based on the question https://stackoverflow.com/q/63518634/ asked by the user 'KaranJ' ( https://stackoverflow.com/u/3368745/ ) and on the answer https://stackoverflow.com/a/63582793/ provided by the user 'Tristan Nemoz' ( https://stackoverflow.com/u/11482728/ ) at 'Stack Overflow' website. Thanks to these great users and Stackexchange community for their contributions.

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Stochastic Gradient Descent implementation in Python from scratch. is the implementation correct?

Also, content (except music) is licensed under CC BY-SA https://meta.stackexchange.com/help/l...

The original Question post is licensed under the 'CC BY-SA 4.0' ( https://creativecommons.org/licenses/... ) license, and the original Answer post is licensed under the 'CC BY-SA 4.0' ( https://creativecommons.org/licenses/... ) license.

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---


When it comes to optimizing machine learning models, Stochastic Gradient Descent (SGD) stands out as a powerful technique. However, implementing it correctly can be challenging, and small mistakes can lead to oscillating results rather than the desired convergence. This guide walks through a common question regarding an implementation of SGD, identifies errors, and provides an efficient solution.

The Problem

The user asked about their SGD implementation, whose results oscillated or diverged instead of converging toward the optimum, even with very small learning rates. The confusion centered on:

Data generation,

The SGD implementation itself,

The settings of hyperparameters.

The complexity of their current approach led to inefficiencies and potentially inaccurate results.

The Solution: Step-by-Step Corrections

1. Data Generation

The initial data generation process was overly complex. Instead of building random data with nested lists, it is far more efficient to use numpy directly:

[[See Video to Reveal this Text or Code Snippet]]
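The snippet itself is not reproduced in this text. As a minimal sketch of the idea, assuming a hypothetical dataset of 1000 samples with 3 features drawn from a standard normal distribution (the sizes, the seed, and the names `n_samples` and `n_features` are illustrative and not taken from the original answer), one numpy call can stand in for the nested lists:

```python
import numpy as np

# Hypothetical sizes and seed, chosen only for illustration.
n_samples, n_features = 1000, 3
rng = np.random.default_rng(0)

# One vectorized call replaces nested Python lists of random numbers.
X = rng.normal(size=(n_samples, n_features))
print(X.shape)  # (1000, 3)
```

The result is a contiguous float array, so later steps can use matrix operations instead of Python loops.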

2. Setting Up the Model

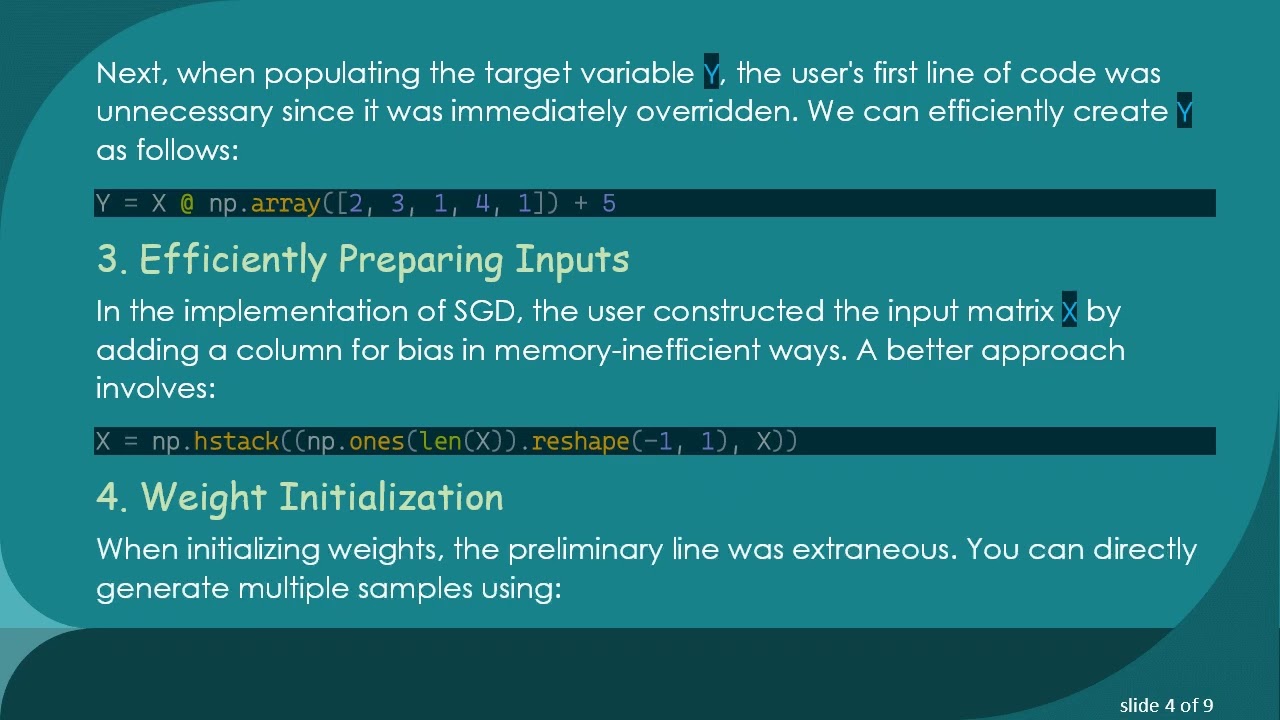

Next, when populating the target variable Y, the user's first line of code was unnecessary because the very next line overwrote it. Y can be created in a single step:

[[See Video to Reveal this Text or Code Snippet]]
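Since the code is elided here, the following is only a sketch of how a target could be built in one expression. It assumes a linear relationship with hypothetical ground-truth parameters `true_w` and `true_b` plus a small amount of Gaussian noise. The actual target used in the question may differ.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))

# Hypothetical ground-truth parameters used to synthesize the target.
true_w = np.array([2.0, -1.0, 0.5])
true_b = 4.0

# Build Y in one vectorized expression: linear signal plus Gaussian noise.
noise = rng.normal(scale=0.1, size=X.shape[0])
Y = X @ true_w + true_b + noise
print(Y.shape)  # (1000,)
```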

3. Efficiently Preparing Inputs

In the SGD implementation, the user built the input matrix X with an extra bias column in a memory-inefficient way. A better approach is:

[[See Video to Reveal this Text or Code Snippet]]
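One common way to add a bias column, shown here as an assumption about what the elided snippet does, is to stack a column of ones next to the features with `np.hstack`. The name `X_b` is introduced only for this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))

# Prepend a column of ones so the first weight acts as the bias term.
X_b = np.hstack([np.ones((X.shape[0], 1)), X])
print(X_b.shape)  # (5, 4)
```

With this layout, a single weight vector of length 4 holds the bias and the three feature weights, and `X_b @ W` computes every prediction at once.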

4. Weight Initialization

When initializing the weights, the preliminary line was extraneous. All the weights can be drawn in a single call:

[[See Video to Reveal this Text or Code Snippet]]
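A minimal sketch of single-call initialization, assuming the weights are drawn from a standard normal distribution and that the bias is stored as the first entry (both are assumptions, since the original line is not shown):

```python
import numpy as np

rng = np.random.default_rng(0)
n_features = 3  # hypothetical number of input features

# Draw every weight (bias included) in one call instead of element by element.
W = rng.normal(size=n_features + 1)
print(W.shape)  # (4,)
```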

5. Predictions and Cost Calculation

The original method used nested loops to calculate predictions and costs. This can be simplified using matrix operations:

[[See Video to Reveal this Text or Code Snippet]]
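To illustrate what replacing the nested loops can look like, here is a sketch that assumes a linear model and a mean squared error cost. The helper names `predict` and `mse_cost` are hypothetical, and the cost used in the original question may be scaled differently.

```python
import numpy as np

def predict(X_b, W):
    """Predictions for all rows at once (X_b already contains the bias column)."""
    return X_b @ W

def mse_cost(X_b, Y, W):
    """Mean squared error between predictions and targets."""
    residuals = predict(X_b, W) - Y
    return np.mean(residuals ** 2)

# Tiny example where Y = 1 + 2 * x exactly.
X_b = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
Y = np.array([1.0, 3.0, 5.0])

print(mse_cost(X_b, Y, np.array([1.0, 2.0])))  # 0.0
print(mse_cost(X_b, Y, np.array([0.0, 0.0])))  # (1 + 9 + 25) / 3, about 11.67
```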

6. Implementing the SGD Loop

The loop is the core of SGD and is where the weights are updated. In place of the original random-sampling code, use:

[[See Video to Reveal this Text or Code Snippet]]
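The replacement code is not shown in this text, so the exact sampling call is unknown. One plausible reading, offered as an assumption rather than as the answer's actual code, is to draw mini-batch indices with numpy's random generator and apply a vectorized update. The function `sgd_step` and its parameters are hypothetical, and the gradient assumes a mean squared error cost.

```python
import numpy as np

def sgd_step(X_b, Y, W, lr, batch_size, rng):
    """One SGD update on a randomly drawn mini-batch (MSE cost assumed)."""
    # Sample row indices without replacement using numpy's generator.
    idx = rng.choice(X_b.shape[0], size=batch_size, replace=False)
    X_batch, Y_batch = X_b[idx], Y[idx]
    # Gradient of the mean squared error with respect to W on this batch.
    grad = 2.0 / batch_size * X_batch.T @ (X_batch @ W - Y_batch)
    return W - lr * grad

rng = np.random.default_rng(0)
X_b = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])
W_true = np.array([1.0, 2.0])
Y = X_b @ W_true  # noise-free targets

# At the exact solution the gradient is zero, so the step leaves W unchanged.
W_next = sgd_step(X_b, Y, W_true, lr=0.1, batch_size=2, rng=rng)
print(np.allclose(W_next, W_true))  # True
```

Checking that the true weights are a fixed point of the update is a quick way to catch sign or scaling mistakes in the gradient.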

Ensure that you properly calculate the new cost after updating weights:

[[See Video to Reveal this Text or Code Snippet]]
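As a sketch of the ordering this step describes, the loop below evaluates the cost only after each weight update and records it. It assumes single-sample updates and a mean squared error cost. The learning rate, seed, and toy data are hypothetical.

```python
import numpy as np

def mse_cost(X_b, Y, W):
    """Mean squared error over the full dataset."""
    return np.mean((X_b @ W - Y) ** 2)

rng = np.random.default_rng(0)  # hypothetical seed
X_b = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
Y = np.array([1.0, 3.0, 5.0])   # exactly Y = 1 + 2 * x
W = np.zeros(2)
lr = 0.05                        # hypothetical learning rate

cost_history = [mse_cost(X_b, Y, W)]
for _ in range(200):
    i = rng.integers(len(Y))                    # pick one sample at random
    grad = 2.0 * (X_b[i] @ W - Y[i]) * X_b[i]   # gradient on that sample
    W = W - lr * grad                           # update first ...
    cost_history.append(mse_cost(X_b, Y, W))    # ... then measure the new cost

print(cost_history[0], cost_history[-1])
```

Computing the cost before the update would report the value for the previous weights, which shifts the whole history by one iteration.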

7. Final Implementation

Putting everything together, your corrected SGD code would now look as follows:

[[See Video to Reveal this Text or Code Snippet]]
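The full corrected listing is not included in this text. The following is an independent sketch of how the pieces discussed above might fit together, not the answer's code. It assumes a linear model with a mean squared error cost and mini-batches taken from a fresh shuffle each epoch (a common variant of random sampling). The function name `sgd_linear_regression` and all hyperparameter values are hypothetical.

```python
import numpy as np

def sgd_linear_regression(X, Y, lr=0.01, n_epochs=50, batch_size=32, seed=0):
    """Mini-batch SGD for linear regression; returns weights and cost per epoch."""
    rng = np.random.default_rng(seed)
    n_samples = X.shape[0]
    # Bias column of ones, so W[0] is the intercept.
    X_b = np.hstack([np.ones((n_samples, 1)), X])
    W = rng.normal(size=X_b.shape[1])

    cost_history = [np.mean((X_b @ W - Y) ** 2)]
    for _ in range(n_epochs):
        order = rng.permutation(n_samples)  # reshuffle the rows every epoch
        for start in range(0, n_samples, batch_size):
            idx = order[start:start + batch_size]
            error = X_b[idx] @ W - Y[idx]
            grad = 2.0 / len(idx) * X_b[idx].T @ error
            W = W - lr * grad
        # Cost is measured after the updates of this epoch.
        cost_history.append(np.mean((X_b @ W - Y) ** 2))
    return W, cost_history

# Synthetic data with hypothetical true parameters.
rng = np.random.default_rng(42)
X = rng.normal(size=(1000, 3))
true_w, true_b = np.array([2.0, -1.0, 0.5]), 4.0
Y = X @ true_w + true_b + rng.normal(scale=0.1, size=1000)

W, cost_history = sgd_linear_regression(X, Y)
print(W)                                    # close to [4.0, 2.0, -1.0, 0.5]
print(cost_history[0], cost_history[-1])    # cost should drop sharply
```

With these settings, the learned weights should end up near the values used to generate the data and the final cost near the noise variance. If the cost grows instead, the learning rate is the first thing to reduce.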

8. Conclusion

By revising the initial code and applying these corrections, you should see improved convergence in your SGD implementation. Verify your results regularly and plot the cost over iterations to confirm that training is on track. Small changes in the implementation can have a large effect on convergence and stability.
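One way to verify results for a linear model, sketched here under the assumption of a squared-error cost, is to compare the SGD weights with the closed-form least-squares solution from `np.linalg.lstsq`. The data, learning rate, and iteration count are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(200, 2))
X_b = np.hstack([np.ones((200, 1)), X])
Y = X_b @ np.array([1.0, -2.0, 3.0]) + rng.normal(scale=0.05, size=200)

# Reference solution from ordinary least squares.
W_ref, *_ = np.linalg.lstsq(X_b, Y, rcond=None)

# Plain SGD with single-sample updates.
W = np.zeros(3)
for _ in range(5000):
    i = rng.integers(200)
    W -= 0.01 * 2.0 * (X_b[i] @ W - Y[i]) * X_b[i]

# Largest absolute difference between the two solutions.
print(np.max(np.abs(W - W_ref)))
```

A small difference suggests the update rule is correct. A large or growing difference points to a bug in the gradient or to a learning rate that is too high.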

With these corrections, you are well on your way to mastering the technique of Stochastic Gradient Descent in Python!
