YouTube videos: Multiheadattention

A Deep Dive into Multi-Head Attention, Self-Attention, and Cross-Attention

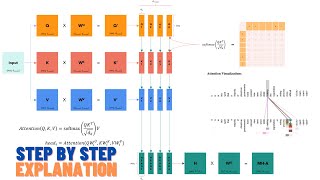

Attention in transformers, step-by-step | Deep Learning Chapter 6

Multi-Head Attention Visually Explained

Visual Guide to Transformer Neural Networks - (Episode 2) Multi-Head & Self-Attention

The Multi-head Attention Mechanism Explained!

Multi Head Attention in Transformer Neural Networks with Code!

Attention mechanism: Overview

Introduction to Multi head attention

MultiHeadAttention

Attention Is All You Need (Transformer): Model Explanation (Including Math), Inference and...

Self Attention with torch.nn.MultiheadAttention Module

running nn.MultiHeadAttention

Multihead Attention's Impossible Efficiency Explained

L19.4.3 Multi-Head Attention

What is Multi-head Attention in Transformers | Multi-head Attention v Self Attention | Deep Learning

Reshaping MultiHeadAttention Output in TensorFlow

Visualize the Transformers Multi-Head Attention in Action

Multi Head Attention

How Attention Mechanism Works in Transformer Architecture

Implementation of Multi-Head-Attention using PyTorch